Do you want to create your own virtual influencer? Do you want to use it in your business’s advertising campaigns, or grow it on social media to earn money? In this article, we answer the question: how do you create a virtual influencer?

We will review multiple solutions, from simple and cost-effective to the most advanced and professional.

A virtual influencer is a digital character designed to interact with online communities, blending lifelike visuals with captivating stories to engage followers. While the technology is impressive, their true power comes from their ability to humanize a brand through storytelling and personality.

These computer-generated characters, who may pass for real people at first glance, are powered by advanced technology and built around rich, well-crafted backstories.

They offer many uses and benefits for brands and businesses, and anyone can create a virtual influencer for their own purposes.

Automating a virtual influencer with artificial intelligence can be a game-changer. It offers businesses a fresh, innovative way to interact with audiences while avoiding much of the cost and unpredictability of working with human influencers.

Let’s start with solutions.

Virtual Influencer Development Solutions

We assume the virtual influencer has a visible face or body, rather than being a faceless character such as a social media bot that only publishes text.

Generating Images with AI

The easiest and most affordable way to create a digital influencer is by generating images with AI.

All you need to do is imagine your influencer’s character—how they should look, their specific visual traits, and the style you’re aiming for. Once you have a clear idea, you simply provide the AI with a description or a style reference, and it generates an image based on your input.

AI models like DALL-E, Flux, or Imagen can quickly transform a text prompt into a high-quality image.

This makes the process incredibly simple and accessible, allowing you to create visually striking character portraits in just a few steps.

However, this method has significant limitations: it only produces static images, and each one is generated independently. While these high-quality pictures can be shared on social media, on their own they amount to a simple character with limited use. We explain why in the next sections.

If your goal is to build a true virtual influencer, character pictures alone aren’t enough. A real influencer, whether human or digital, is more than a face or a collection of images.

They have a unique story, engage with their audience, share snippets of their daily life, post videos, talk about their interests, and even interact with their followers through messages.

This level of storytelling and engagement makes a virtual influencer feel real and relatable.

Unfortunately, AI-generated images alone won’t achieve this. While they offer a quick and affordable way to visualize your character, they lack the depth, strategy, and interaction that define an influencer.

This article will explore additional challenges and alternative solutions that can help bring your virtual influencer to life.

Creating Content with Digital Character Generators

The second solution takes things a step further. Instead of generating standalone images with prompts each time, you can build a character or model using AI and use that as a base for producing both images and videos.

Here’s how it works: First, you provide the tool with at least 5 images of a real person or a fictional character—this could even be an AI-generated character from the first solution. The AI then analyzes these images, creates a model based on them, and saves it as a target character.

Once your character is set, you can generate new images or videos simply by giving different prompts. Since the AI now understands the character’s fixed traits, you won’t need to repeatedly describe their hair color, facial features, or other details. The model ensures consistency across all generated content.

The key advantage of this approach over the first solution is that you’re not just creating random images every time—you have a structured, recognizable character. This allows for more control, continuity, and customization, making your virtual influencer feel more real and consistent across different content.

In practice, your prompts only need to cover the character’s actions, clothing, or setting, and the AI generates the image or video accordingly.

For example, a prompt could be as simple as: “Alex is playing tennis and wearing a red shirt. He’s hitting the tennis ball.”

Since the AI already knows what Alex looks like, it focuses on the new details without requiring a full description of his facial features or hair color. This makes the process much more efficient and ensures consistency across different visuals.

That said, as of 2025, many of these tools are still in development. Their results are promising, but they don’t yet deliver the quality and consistency a virtual influencer needs. With continued advances, we can expect better and more refined results in the coming years.

Deepfaking a Face or the Whole Character

The third solution involves using deepfake technology to bring your virtual influencer to life.

Deepfaking is an AI-driven technique that creates fake videos, images, or even voice recordings by replacing a person’s face or voice with another. Unlike simple photoshopping or copy-pasting, deepfakes use deep learning to seamlessly alter facial features, making them nearly indistinguishable from a real human to the naked eye.

For example, Brad Pitt’s face in a video could be replaced with anyone else’s!

At its core, deepfake technology primarily focuses on modifying faces while keeping the rest of the image or video relatively unchanged. However, it can also be used to generate entirely new faces by analyzing a dataset of different people and synthesizing someone who doesn’t exist. This makes it a viable method for creating images and videos of a virtual influencer.

That said, deepfaking still comes with challenges. AI limitations can lead to robotic movements, inconsistent backgrounds, awkward facial expressions, poor lip-syncing, and an overall unnatural feel.

Since AI still struggles with fully understanding context and human motion, the results might not always be convincing. While deepfakes offer an exciting way to animate virtual influencers, they require refinement and careful execution to avoid uncanny or unrealistic outcomes.

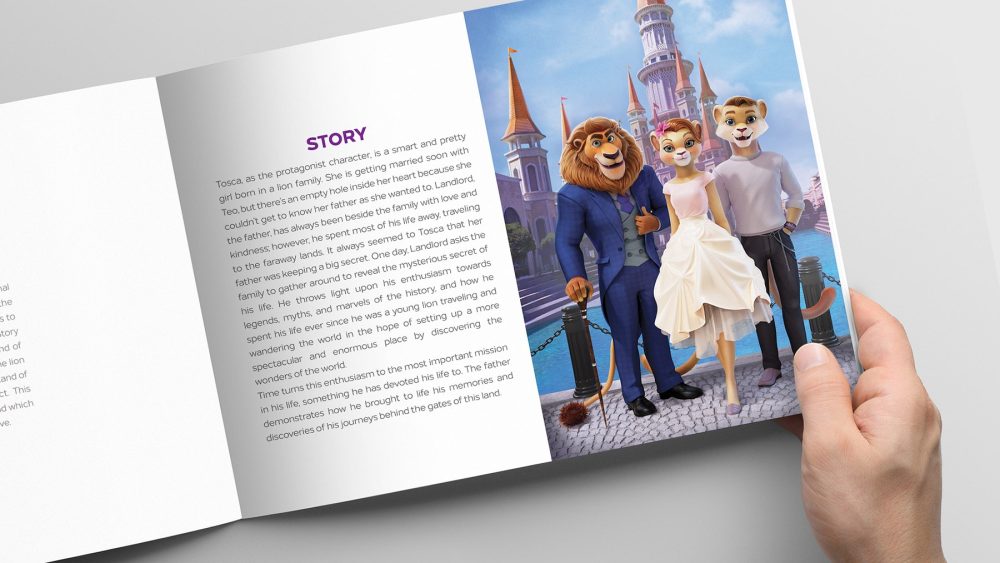

3D Modeling—Dubbing and CGI Production

CGI (Computer-Generated Imagery) is widely used to create highly realistic images, especially in movies and video games. It allows for the creation of digital characters that look natural and blend seamlessly into their environments.

A key part of CGI is 3D modeling, where a character (or any object) is built in three dimensions. Unlike flat images, a 3D model can move, interact, and appear more lifelike. This makes it an ideal choice for creating a virtual influencer that looks and behaves realistically.

These technologies are commonly used in industries like film, gaming, and architecture, but they can also play a crucial role in designing and animating digital influencers. At Dream Farm Agency, we have used this technology to create 3D characters and virtual mascots; take a look at our work.

3D models allow a virtual influencer to have smooth movements, realistic expressions, and greater flexibility across different types of content.

This kind of production requires skilled artists and other professionals, as well as powerful computers and software such as Blender, Autodesk Maya, 3ds Max, and ZBrush.

Let’s walk through an example. Have you seen The Curious Case of Benjamin Button?

In the film, Brad Pitt’s character, Benjamin Button, was digitally aged and de-aged using advanced CGI. Through 3D modeling, the filmmakers created highly realistic aging effects, portraying him across decades of his life while keeping his distinct facial features recognizable at every stage.

The same techniques can be applied when modeling a virtual influencer. The process typically starts with a 2D design of the character or an existing image as a reference. From there, the character is transformed into a 3D model, allowing for lifelike movements, facial expressions, and interactions—just like what was done with Benjamin Button in the movie.

Before the 3D model can be animated, a few more steps are needed:

- Rigging: Rigging is adding a skeleton to the virtual influencer model so it can be posed and animated. It also includes controls for facial expressions and body movements.

- Texturing: Textures are added to the 3D model of the influencer to give it realistic details like skin, clothes, and surfaces. This includes mapping 2D images (textures) onto the 3D model’s surfaces.

- Shading: Shading adds depth and realism to the influencer by adjusting how light interacts with its surfaces. It makes the character look less flat and more lifelike.
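Rigging is what lets an animator pose the character by rotating joints rather than moving every point of the mesh by hand. As a rough illustration only, the Python sketch below computes forward kinematics for a hypothetical two-bone 2D arm: each bone’s angle is relative to its parent, so rotating the upper arm carries the forearm along with it. The `Bone` class and `forward_kinematics` function are made-up names for this example, not the API of Blender, Maya, or any other package, and real rigs are 3D, with many more bones, facial controls, and skin weights.

```python
import math
from dataclasses import dataclass


@dataclass
class Bone:
    """One bone in a hypothetical 2D rig: its length and its rotation
    relative to the parent bone, in degrees."""
    name: str
    length: float
    angle_deg: float


def forward_kinematics(bones, root=(0.0, 0.0)):
    """Return the (x, y) position of every joint, starting at the root.

    Each bone's rotation is added to the accumulated rotation of its
    parents, so posing a parent bone moves all of its children too.
    """
    x, y = root
    total_angle = 0.0
    joints = [(x, y)]
    for bone in bones:
        total_angle += math.radians(bone.angle_deg)
        x += bone.length * math.cos(total_angle)
        y += bone.length * math.sin(total_angle)
        joints.append((x, y))
    return joints


# Upper arm points straight up; forearm bends 90 degrees back to horizontal.
arm = [Bone("upper_arm", 30.0, 90.0), Bone("forearm", 25.0, -90.0)]
print([(round(px, 2), round(py, 2)) for px, py in forward_kinematics(arm)])
# [(0.0, 0.0), (0.0, 30.0), (25.0, 30.0)]
```

Changing only the upper arm’s angle would move both the elbow and the wrist, which is exactly the parent-child behavior a rig gives animators.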

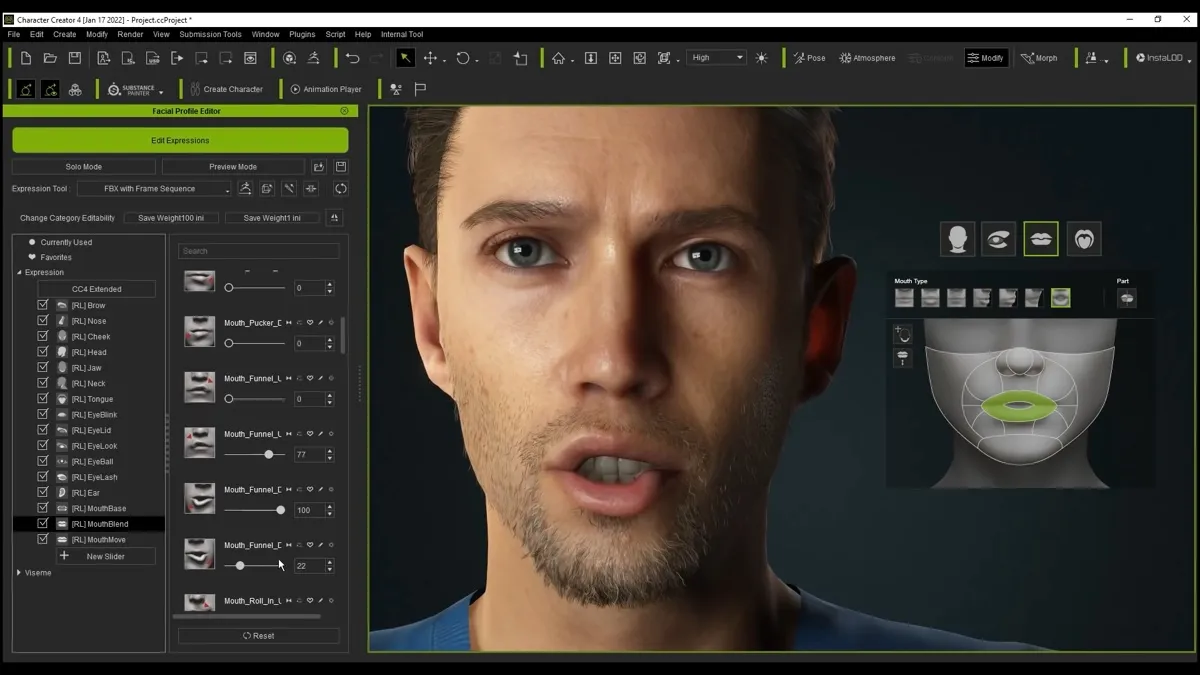

Animating the 3D model

Animation is the technique that uses motion to bring the virtual influencer to life.

Animating the 3D model is not simple; it usually moves through several stages:

- The 3D character animation process begins with blocking, where key poses and movements are established.

- After that, animators move on to splining, smoothing out the animation by adding in-between frames for fluid motion.

- Once the movement is more refined, the animation enters the polishing phase, where timing and subtle details are fine-tuned.

- Secondary animation adds extra layers, like the movement of clothes or hair, to make everything feel more dynamic.

- If the character needs to express emotions or talk, facial animation brings the character’s face to life.

- Finally, the animator goes through refining and tweaking to fix any unnatural movements before the animation is ready for final rendering, where all elements come together for the finished scene.
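The splining step can be illustrated with a small interpolation sketch. Assuming the blocking pass produced a few key poses for one animation channel (here a hypothetical head-turn angle), the code below fills in every in-between frame with a uniform Catmull-Rom spline, one common choice of curve that passes through each key. Animation packages offer their own curve types and tangent controls; the function names here are illustrative, and treating the keys as evenly spaced is a simplification.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Uniform Catmull-Rom value between p1 (t=0) and p2 (t=1)."""
    return 0.5 * (
        2 * p1
        + (-p0 + p2) * t
        + (2 * p0 - 5 * p1 + 4 * p2 - p3) * t * t
        + (-p0 + 3 * p1 - 3 * p2 + p3) * t ** 3
    )


def spline_channel(keys):
    """Fill in every frame between blocked key poses.

    keys: (frame, value) pairs sorted by frame, e.g. a joint angle.
    Returns {frame: value} for every frame from the first key to the last.
    """
    frames = {}
    for i in range(len(keys) - 1):
        f1, v1 = keys[i]
        f2, v2 = keys[i + 1]
        # At the ends of the curve, reuse the end key as its own neighbor.
        v0 = keys[i - 1][1] if i > 0 else v1
        v3 = keys[i + 2][1] if i + 2 < len(keys) else v2
        for f in range(f1, f2):
            t = (f - f1) / (f2 - f1)
            frames[f] = catmull_rom(v0, v1, v2, v3, t)
    frames[keys[-1][0]] = keys[-1][1]
    return frames


keys = [(0, 0.0), (12, 45.0), (24, 0.0)]  # blocked poses: (frame, angle in degrees)
curve = spline_channel(keys)
print(curve[6])  # 25.3125; a straight linear blend would give 22.5
```

Unlike a linear blend, which would change direction abruptly at frame 12, the spline curves smoothly through the peak key, which is part of what makes motion read as fluid rather than mechanical.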

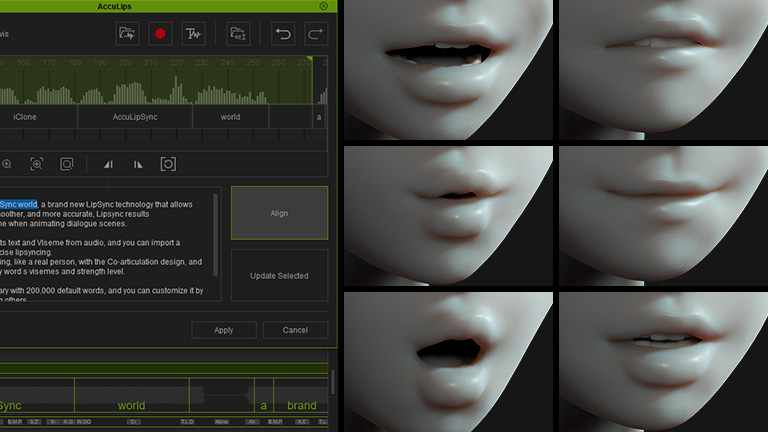

Dubbing with CGI

CGI makes it possible to sync facial expressions with a recorded voice and achieve realistic lip-syncing. The voice can be AI-generated or performed by a real actor, depending on the level of authenticity you want.

Several techniques help make the virtual influencer even more lifelike.

One key technique is motion capture (mo-cap), which records facial movements and maps them onto the 3D model. This ensures that expressions and lip movements align naturally with the voiceover, creating a more realistic and engaging result.
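Where motion capture isn’t available, a common fallback is to drive the mouth from the phonemes in the audio. The sketch below assumes you already have phoneme timings (for example from a speech-alignment tool) and maps each phoneme to a mouth shape, or viseme, for every frame at 24 fps. The phoneme-to-viseme table is a hypothetical, heavily simplified example; production rigs use larger viseme sets and blend between shapes instead of switching on each frame.

```python
# Hypothetical, simplified phoneme-to-viseme table using ARPAbet-style
# phoneme labels. Real rigs define their own, larger viseme sets.
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "teeth_on_lip", "V": "teeth_on_lip",
    "AA": "open", "AE": "open", "AH": "open",
    "OW": "round", "UW": "round", "W": "round",
    "IY": "wide", "EH": "wide",
}


def visemes_per_frame(timed_phonemes, fps=24):
    """Turn (phoneme, start_sec, end_sec) tuples into one mouth shape per frame.

    Unknown phonemes and gaps between phonemes fall back to "rest".
    """
    if not timed_phonemes:
        return []
    n_frames = int(round(max(end for _, _, end in timed_phonemes) * fps))
    shapes = ["rest"] * n_frames
    for phoneme, start, end in timed_phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "rest")
        for frame in range(int(round(start * fps)), int(round(end * fps))):
            if 0 <= frame < n_frames:
                shapes[frame] = viseme
    return shapes


# "mama" = M AA M AA, each phoneme lasting 0.125 s (hypothetical timings)
timings = [("M", 0.0, 0.125), ("AA", 0.125, 0.25), ("M", 0.25, 0.375), ("AA", 0.375, 0.5)]
print(visemes_per_frame(timings))
```

The resulting per-frame list is the kind of track an animator would then smooth and hand-adjust so the mouth anticipates sounds slightly, as real speakers do.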

Video Production

Creating virtual influencer videos involves combining references, movements, animations, assets, and environments, and it’s not as simple as it sounds. Then again, with the help of AI, it could be!

Traditionally, one approach is to film a real-world setting and then incorporate the 3D-modeled and animated virtual influencer into it. This process relies on CGI and compositing, a technique that blends computer-generated characters with real-world footage to make them appear naturally integrated into the environment.
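At its simplest, compositing blends each pixel of the rendered character over the matching pixel of the filmed plate, weighted by the character’s alpha matte (its per-pixel coverage). The sketch below implements the standard “over” operator with straight, non-premultiplied alpha on tiny images stored as nested lists. It deliberately leaves out everything that makes real compositing hard, such as camera tracking, light matching, shadows, and grain, and the function names are illustrative.

```python
def over(fg_rgb, alpha, bg_rgb):
    """Composite one foreground pixel over an opaque background pixel.

    Straight-alpha "over": result = alpha * fg + (1 - alpha) * bg, per channel.
    Channel values are floats in [0, 1].
    """
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg_rgb, bg_rgb))


def composite(fg_layer, alpha_matte, plate):
    """Place a rendered character layer over live-action footage.

    fg_layer and plate are lists of rows of RGB tuples; alpha_matte holds the
    character's coverage per pixel (1.0 = character, 0.0 = background only).
    """
    return [
        [over(f, a, b) for f, a, b in zip(fg_row, a_row, bg_row)]
        for fg_row, a_row, bg_row in zip(fg_layer, alpha_matte, plate)
    ]


# A 1x2 "image": a red character pixel and a half-covered edge pixel over a blue plate.
fg = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
matte = [[1.0, 0.5]]
plate = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]
print(composite(fg, matte, plate))
```

The half-covered pixel comes out as an even mix of character and background, which is how soft edges such as hair blend into the footage instead of looking cut out.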

With advancements in AI and CGI, this process is becoming more seamless, making it possible to create highly convincing digital influencers that feel like they truly exist in the real world.

Connecting the 3D Model to AI Content Creation Tools

In this solution, the virtual influencer is created manually, and AI-integrated applications are then used to generate videos based on prompts.

The process starts with designing a digital character (like Alex) using 3D modeling software or AI image generation tools. This step defines the character’s appearance, personality, and key traits, ensuring consistency across different visuals.

Once the character is ready, AI-powered platforms can generate videos or images based on it. You simply provide the AI with the character model as a reference and a prompt, such as “Alex is playing football.” The AI then processes this input and produces a video or image of Alex performing the action.

This method combines manual character creation with AI automation, offering more flexibility and control while simplifying content production.

Now let’s look at the challenges each approach faces.

Challenges

Creating a virtual influencer is no easy task and comes with various challenges. At Dream Farm Agency, we have encountered most of these challenges and have developed solutions to address them.

Character Consistency

Just like a real human influencer, a virtual influencer needs to stay consistent throughout videos and images, with only minor changes like clothing or makeup.

Consistency is key to making the character feel real and engaging. If the influencer’s appearance, movements, or overall style keeps changing, it disrupts the viewer’s experience and makes the content feel less authentic. People connect with characters that feel stable and familiar, so maintaining that consistency is crucial for keeping the audience interested and invested.

While AI image-generating tools can create stunning visuals of a virtual influencer, they struggle with maintaining consistency across different images. A slight change in the prompt can result in a completely different-looking character, making it difficult to sustain a cohesive and recognizable identity over time.
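A partial mitigation, offered as a sketch rather than a fix, is to keep the character’s description word-for-word identical in every prompt and vary only the scene details. The Python below builds prompts that way for the Alex example from earlier; the `CharacterSheet` fields and the trait values are hypothetical, and the code only produces text that you would paste into whichever image generator you use. Even with identical wording (and a fixed random seed, where a tool exposes one), results still drift, which is why the character-model tools described earlier exist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CharacterSheet:
    """Fixed visual traits that should not change between images.
    All field values used below are hypothetical examples."""
    name: str
    appearance: str
    style: str


def build_prompt(sheet: CharacterSheet, scene: str, outfit: str = "") -> str:
    """Combine the unchanging character description with per-post details.

    Only `scene` and `outfit` vary, so the words describing the character's
    face and body stay identical from one prompt to the next.
    """
    parts = [f"{sheet.name}, {sheet.appearance}", scene]
    if outfit:
        parts.append(f"wearing {outfit}")
    parts.append(sheet.style)
    return ", ".join(parts)


alex = CharacterSheet(
    name="Alex",
    appearance="a man in his late twenties with short curly black hair, brown eyes and a light beard",
    style="photorealistic, natural daylight, 35mm photo",
)
print(build_prompt(alex, "playing tennis and hitting the ball", "a red shirt"))
print(build_prompt(alex, "reading a book in a cafe"))
```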

Deepfake technology faces similar challenges. Frame-by-frame variations can cause inconsistencies in the character’s appearance, especially when placed in different environments or interacting with other objects and people. These inconsistencies can break immersion and reduce realism, making it harder to maintain a stable, believable virtual influencer across longer videos.

Character Movement and Facial Expressions

When a virtual influencer moves naturally and expresses emotions through facial expressions, they feel more human-like and relatable. This helps build a stronger emotional connection with the audience, making interactions feel more real and engaging.

However, if the virtual influencer lacks smooth movements or expressive facial reactions, they can come across as robotic and disconnected. Without these natural elements, the character might feel flat or lifeless, making it harder for the audience to stay interested.

While deepfakes can be incredibly convincing for still images and close-up shots, they struggle when it comes to animating body movements. The AI often fails to create fluid, natural motion, especially when the character interacts with their environment or moves in complex ways. The result can feel stiff, awkward, or even out of sync, with the risk of the character’s body appearing to “float” unnaturally. This makes it difficult to maintain realism over longer video sequences.

Facial expressions in deepfakes also pose a challenge. Sometimes, emotions look too flat, lacking the subtle variations of real human expressions. Other times, they appear exaggerated, making the character seem overly dramatic or unnatural. Even small misalignments—such as certain facial features not moving correctly or staying frozen—can create an eerie, unsettling effect. These inconsistencies break the illusion of realism, making the character feel more robotic than human.

Live Interaction and Real-Time Connection with the Audience

A virtual influencer feels more engaging and relatable when they can respond instantly to comments—especially during a live stream. For example, if a viewer asks about the influencer’s latest outfit, a real-time response makes the interaction feel more personal and authentic.

On the other hand, if the influencer can only post pre-recorded content, like scripted videos, the experience becomes less dynamic. Imagine watching a live stream where the influencer doesn’t acknowledge any comments—it would feel distant and disconnected, making it harder for the audience to stay engaged.

Creating a virtual influencer using digital character generators comes with major limitations, particularly when it comes to real-time interaction. These tools are primarily designed for generating static images or pre-recorded videos, meaning they struggle with responding to multiple inputs at once or adapting to changes on the fly.

One of the biggest challenges is synchronizing movements with voiceovers—often, the character’s gestures and facial expressions don’t align perfectly with their speech, making them feel unnatural. Since this technology isn’t yet optimized for live, dynamic interactions, achieving a truly fluid and responsive virtual influencer in real time remains a significant challenge.

High-Quality Video Creation

Viewers expect high-quality content, whether it’s from a human or a virtual influencer. When scrolling, people naturally gravitate toward visually striking, well-produced videos. For a virtual influencer, this presents both an opportunity and a challenge—while the digital nature allows for stunning visuals, any drop in quality can break immersion and make the content feel less engaging. Since virtual influencers already rely on computer-generated visuals, maintaining a high standard is essential to keeping the audience interested and making the experience feel real.

Deepfakes excel at face-swapping, but their biggest weakness is in simulating realistic interactions with the environment and adapting body movements. Since deepfake technology primarily focuses on facial features, it struggles with body posture, hand movements, and natural shifts in motion. When it comes to complex or dynamic actions, deepfakes lack the fluidity and realism that viewers expect.

This limitation makes it difficult to create lifelike videos where the character moves around, interacts with objects, or engages with other people in a believable way. Without smooth and cohesive body movement, deepfake-generated influencers risk feeling artificial, reducing their ability to truly connect with an audience.

Creating a Unique Identity and Story Universe for the Character

Creating a unique identity for a virtual influencer extends beyond just their appearance; it encompasses their personality, style, voice, actions, and how they engage with their audience. Take a look at your Instagram and consider the real influencers you follow. Why did you choose to follow them? Perhaps it’s because they offer valuable insights or because they are entertaining. Each of them has a distinct narrative that forms their universe, and if this element is overlooked, they may come across as unappealing and boring.

A well-developed character has depth and story, making them memorable and relatable.

A strong identity can come from a distinct sense of humor, the topics they engage with, or how they interact with comments and trends. Without these traits, the character may feel generic, failing to capture the audience’s attention. If nothing makes them stand out, viewers will simply scroll past. It’s the unique quirks and traits that keep people coming back for more.

If the goal is to build a truly one-of-a-kind virtual influencer, character-based content generation tools alone aren’t enough. Before choosing any tool, you need a strategy and a story universe for the character.

Matching Emotions and Personalities

If a virtual influencer’s emotions and personality aren’t aligned, they can feel unnatural and disconnected. For example, if a character who is usually happy and energetic suddenly appears sad in an unrelated situation, the audience might feel confused and struggle to connect. Likewise, if a virtual influencer’s facial expressions remain static and never change, the character comes across as stiff and lifeless—more like a robot than a real personality.

Deepfake technology, while impressive at manipulating facial appearances, doesn’t allow for dynamic personality evolution. It works by replicating pre-existing traits from its training data, meaning the emotions and personality of a deepfake character are typically fixed. This makes it difficult for the character to adapt naturally to different situations.

Since deepfakes don’t build a personality in real-time, they lack depth, growth, and adaptability in their interactions. Emotional responses tend to feel repetitive or unnatural, which limits their ability to react to new situations in a believable way. This lack of flexibility makes deepfakes less effective for creating characters that need to evolve or maintain a dynamic presence over time.

Cost of Resources and Technical Knowledge

Keep in mind that all the solutions mentioned come with costs, and the amount you’ll need to invest depends on your specific needs and expectations.

Both 3D modeling and CGI-style production combined with AI content creation tools require significant computational power, especially when advanced techniques like motion capture or 3D animation are involved. These processes demand high processing capacity to generate realistic, high-quality results.

The complexity of modeling, animating, and integrating AI-based tools makes powerful hardware and software essential. High-quality animations, seamless AI integration, and detailed character designs require robust systems to handle the workload efficiently.

Without sufficient computing resources, processing slows down and the risk of glitches or errors rises, which can force compromises on output quality. To maintain efficiency and realism, investing in strong computational infrastructure is crucial for producing smooth, high-resolution content without unnecessary delays.

In terms of knowledge and expertise, AI-based tools that produce results with little setup may not require advanced technical skills. However, they still face the other challenges described above, and these platforms can be quite expensive.

Complex Environments

Imagine a virtual influencer who always appears against the same plain white background—video after video. Pretty dull, right? Real influencers, like actual people, interact with different environments—they travel, shop, hang out in new places, and bring variety to their content. You’d expect a virtual influencer to do the same. Sticking to the same static backdrop over and over again makes them feel more like a stagnant image than a dynamic, relatable personality. It removes that sense of adventure and realism that keeps an audience engaged.

Deepfake technology, while great at face-swapping, isn’t designed to seamlessly integrate characters into complex environments. It struggles with creating realistic backgrounds or natural interactions between a character and their surroundings.

When you try to place a deepfake character in a more complex setting or have them interact naturally with their environment, things often feel off. Deepfake AI doesn’t fully understand how to merge the character with the scene, leading to disjointed, unnatural results—where the influencer may look out of place or fail to blend realistically with the background. This lack of depth makes it harder for a deepfake-generated influencer to feel truly immersive and believable.

Syncing Voice and Movement (Lip Syncing)

When a person talks, their mouth moves in sync with their speech—we don’t even think about it, but we instantly notice when something’s off. If someone speaks through their teeth or barely moves their mouth, it feels unnatural and distracting, breaking the natural flow of communication.

The same applies to virtual influencers. If their mouth movements don’t match their speech, it creates a disconnect that makes them feel less real. We’re wired to expect that natural synchronization, and when it’s missing, it becomes difficult to fully engage with the character.

Deepfake technology, which manipulates existing video or images, struggles with natural voice and movement synchronization. Since deepfakes primarily focus on facial alterations, they lack advanced systems that smoothly coordinate lip movements, body language, and voice.

For example, lip-syncing often looks slightly off because the software doesn’t always capture the precise timing of speech or align it perfectly with facial expressions. This mismatch can make the character feel awkward, robotic, or disconnected, reducing their believability and making interactions feel unnatural.

What are the best practices to create a virtual influencer?

As of 2025, there are two main methods that reliably produce high-quality virtual influencers.

Creating a virtual influencer is one of our services. We can help streamline the process for businesses looking to bring their digital character to life.

Now let’s discuss two best practice approaches.

Traditional Method (3D Modeling + CGI)

This approach is the more hands-on process. First, you create a 3D model of your character, which involves designing its appearance and structure. Thereafter, you use CGI (computer-generated imagery) technology to bring the character to life by animating it and adding voiceovers.

This method takes more time and effort, but it gives you full control over the character’s look, movements, and voice, allowing for very detailed and customized results. This method is ideal if you require a highly refined character for a project where meticulous attention to detail is crucial.

AI-Driven Approach (AI Tools for Creation and Content)

The second method relies on AI technology to create the entire virtual influencer. This process uses a combination of different AI tools, which can generate the character’s 3D model, animate it, and even provide a synthetic voice for the character.

Making an AI influencer this way allows the AI to handle much of the work automatically, making this approach faster and more affordable. While the results can still be impressive, the level of control over each tiny detail isn’t as high as with the traditional method. However, for many creators, the speed and cost-efficiency of the AI-driven approach make it a great option, especially for those looking to produce content on a smaller budget or at a faster pace.

Final words

Virtual influencers are transforming the digital landscape, but which creation method fits your needs? Answering that requires a solid strategy, which you can find in the complete guide to virtual influencer marketing.

The right approach depends on your brand’s vision, goals, and budget. At Dream Farm Agency, we specialize in creating virtual influencers that align perfectly with your brand identity, ensuring consistency, realism, and audience engagement. Whether you’re just exploring the possibilities or ready to bring your digital persona to life, our team is here to guide you every step of the way.

Are you ready to build a virtual influencer who represents your brand and captivates and engages your audience? Let’s bring your vision to reality—reach out to Dream Farm Agency today!
